Extreme Programming (XP), an agile software development methodology, is centred around collaboration, adaptability, and customer satisfaction. In the realm of software development, extreme programming practices is highly relevant due to its iterative nature, emphasizing continuous feedback and flexibility in responding to evolving requirements. It promotes practices like pair programming, continuous integration, and frequent releases, fostering high-quality, adaptable code.

In the context of Large Language Model (LLM) Application Development, extreme programming practices proves invaluable. LLM applications, which often involve complex language processing and evolving user needs, benefit from the iterative and client-focused approach of XP. The methodology ensures that LLM applications can quickly adapt to language structure changes, stay attuned to user feedback, and incorporate advancements in natural language understanding. XP’s emphasis on testing and collaboration is particularly crucial in developing robust and scalable LLM applications, contributing to their efficiency and effectiveness in processing and generating human-like language.

Developing Language Model (LLM) applications demands robust practices to ensure accuracy, efficiency, and adaptability. Given the complex nature of language processing, incorporating rigorous testing, continuous improvement, and agile methodologies is crucial. These practices safeguard against potential errors, enhance the model’s performance, and enable quick adaptation to evolving linguistic nuances and user requirements.

Role of Extreme Programming Practices in LLM Development

Extreme Programming (XP) seamlessly complements Large Language Model (LLM) development by fostering agility and responsiveness. XP’s iterative cycles, continuous feedback loops, and emphasis on adaptability align perfectly with the dynamic nature of language models.

In LLM development, where language nuances evolve and user expectations change, extreme programming practices ensures quick adjustments and efficient integration of improvements. Pair programming and continuous integration enhance code quality, contributing to the robustness of language models. By embracing extreme programming practices, LLM development teams can swiftly respond to linguistic shifts, implement user feedback, and deliver highly adaptable language processing solutions.

Testing Strategies for LLMs

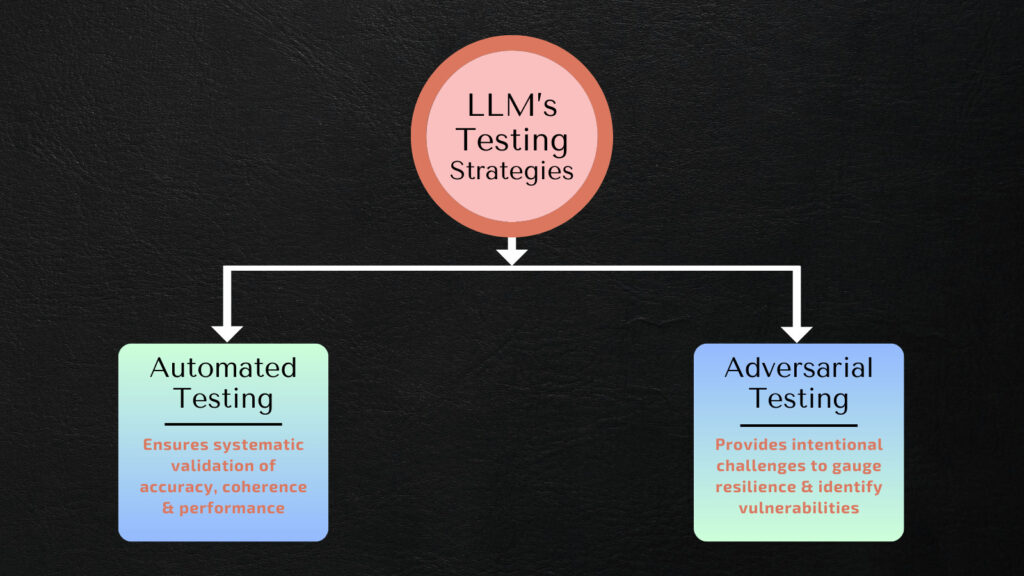

Testing Large Language Models (LLM) involves both automated and adversarial approaches. Automated testing ensures systematic validation of the model’s accuracy, coherence, and performance, offering a streamlined assessment. Simultaneously, adversarial testing introduces intentional challenges to gauge the model’s resilience and identify vulnerabilities.

This dual testing strategy enhances the robustness of LLMs, addressing potential shortcomings while ensuring their efficacy in handling diverse linguistic scenarios and unexpected inputs.

Example-Based Tests LLM’s Weaknesses

Automated testing is paramount for ensuring the reliability and accuracy of Large Language Models (LLMs). The complexity of language processing demands a systematic approach to validate the model’s behavior across various linguistic scenarios. Automated tests provide a quick and efficient means to evaluate LLMs, allowing developers to detect and address issues promptly, reducing the risk of deploying flawed models.

Example-based tests play a crucial role in LLM validation. By creating a diverse set of input examples representing different language structures, nuances, and topics, developers can assess how well the model responds and generates coherent outputs. This technique helps uncover potential weaknesses, refine the model’s understanding of context, and ensure its proficiency in handling a wide range of language inputs. Automated testing, particularly through example-based scenarios, not only enhances the reliability of LLMs but also accelerates the development process by identifying and resolving issues early in the cycle.

Auto-Evaluator Tests Validate LLM’s Behavior

Auto-evaluator tests, a variant of property-based testing, are instrumental in validating the behavior of Large Language Models (LLMs) by systematically exploring a broad range of inputs to ensure adherence to desired properties. Unlike traditional example-based tests, auto-evaluator tests focus on defining general properties or invariants that should hold true for various inputs, making them highly effective in uncovering harder-to-test properties within LLMs.

In the context of LLMs, which deal with intricate language structures, auto-evaluator tests excel at revealing nuanced and complex behaviors. By generating a diverse set of inputs automatically, these tests explore a more extensive space of potential language variations, helping identify subtle issues that may not be apparent with limited manual testing. Auto-evaluator tests enhance the robustness of LLMs by systematically probing their capabilities, providing a comprehensive validation approach that goes beyond typical scenarios and enables developers to uncover and rectify challenging-to-detect issues.

Securing LLM’s Inputs Against Adversarial Attacks

Large Language Models (LLMs) are susceptible to adversarial inputs, where deliberately crafted inputs may lead to unexpected or biased outputs. Adversarial attacks on LLMs exploit vulnerabilities in their training data, and even small alterations in input can result in significant changes in generated content. To address this, robust testing strategies are essential. Incorporating adversarial testing involves deliberately introducing challenging inputs, enabling developers to identify and rectify vulnerabilities. Additionally, diverse and representative training data, coupled with techniques like data augmentation, can enhance the model’s resilience to adversarial inputs.

Safeguarding LLMs against adversarial attacks also involves employing adversarial training during the model’s development phase. By training the model on a mix of standard and adversarially crafted examples, developers can enhance the model’s ability to handle unexpected inputs. Regularly updating the model and staying informed about emerging adversarial techniques are crucial aspects of maintaining the security of LLMs in real-world applications. Through a combination of rigorous testing, diverse training data, and ongoing vigilance, developers can fortify LLMs against potential adversarial challenges and ensure their reliability in diverse linguistic scenarios.

Refactoring for Sustainable LLMs

Refactoring is a pivotal practice in maintaining code quality, ensuring that software remains adaptable, scalable, and efficient over time. In the context of Large Language Model (LLM) applications, where complexity can be high, refactoring becomes even more crucial. Refactoring involves restructuring existing code without changing its external behavior, aiming to improve its readability, maintainability, and performance. It allows developers to eliminate redundancy, enhance code modularity, and address technical debt, ultimately promoting a more robust and sustainable codebase.

In LLM applications, there’s a distinction between LLM engineering and prompt engineering. LLM engineering focuses on refining the language model itself, improving its understanding, and enhancing its language generation capabilities. On the other hand, prompt engineering involves optimizing the input prompts given to the model to achieve desired outputs. Balancing both aspects is essential for achieving optimal results in LLM-based applications.

When it comes to refactoring LLM-based applications, several best practices can ensure code quality and maintainability. First and foremost, establish a modular architecture, breaking down complex functionalities into smaller, manageable components. Regularly review and update the codebase to incorporate improvements in LLM models or advancements in language processing. Implement thorough testing, including both automated and manual testing, to validate the impact of refactoring on the model’s performance. Lastly, document changes and rationale for refactoring to facilitate collaboration and future development.

In summary, refactoring is indispensable in the dynamic landscape of LLM-based applications. It not only enhances code quality but also enables developers to adapt to evolving language models and maintain a high standard of performance and reliability in their applications.

LLM Applications as biased models

Large Language Model (LLM) applications pose significant ethical considerations, necessitating a careful approach to ensure fairness, mitigate bias, and uphold responsible AI practices. One critical concern is fairness, as biased models may inadvertently perpetuate or exacerbate existing societal inequalities. Developers must rigorously assess training data, addressing underrepresentation and ensuring diversity to prevent biases in language generation.

To address bias, it’s essential to implement robust evaluation metrics and continually monitor model outputs for unintended prejudices. Adopting explainable AI techniques can enhance transparency, providing insights into how the model reaches decisions. Additionally, embracing responsible AI practices involves actively involving diverse stakeholders, obtaining consent for data usage, and prioritizing user privacy. Ethical considerations in LLM applications extend beyond technical aspects, requiring a holistic approach that incorporates social, cultural, and legal dimensions to ensure these powerful language models contribute positively to society without perpetuating harm or inequality.

Conclusion

Extreme Programming (XP) practices offer a robust framework for the development of Large Language Model (LLM) applications, ensuring their reliability and efficiency. The key XP practices for LLM application development include early and frequent feedback, promoting constant interaction between developers and users to refine the model’s behavior. Embracing sound software design principles, such as modularity and abstraction, contributes to creating a flexible and maintainable codebase, crucial in adapting to the intricate nature of language processing.

Additionally, extreme programming practices emphasizes the importance of simplicity, encouraging teams to follow engineering basics that save development time. This simplicity, coupled with automated testing, aids in identifying and rectifying issues swiftly, enhancing the overall productivity of LLM development teams. Adopting XP principles ensures an iterative and agile approach, allowing LLM applications to evolve seamlessly with changing linguistic nuances and user requirements. Teams should embrace these practices to build LLM-based solutions that not only meet high-quality standards but also exhibit adaptability, responsiveness, and efficiency in addressing the challenges of language processing.